warning: seriously nasty narcissism at length

archive: https://archive.is/eoXQj

this is a response to the post discussed in: https://awful.systems/post/220620

Hidden away in the appendix:

A quick note on how we use quotation marks: we sometimes use them for direct quotes and sometimes use them to paraphrase. If you want to find out if they’re a direct quote, just ctrl-f in the original post and see if it is or not.

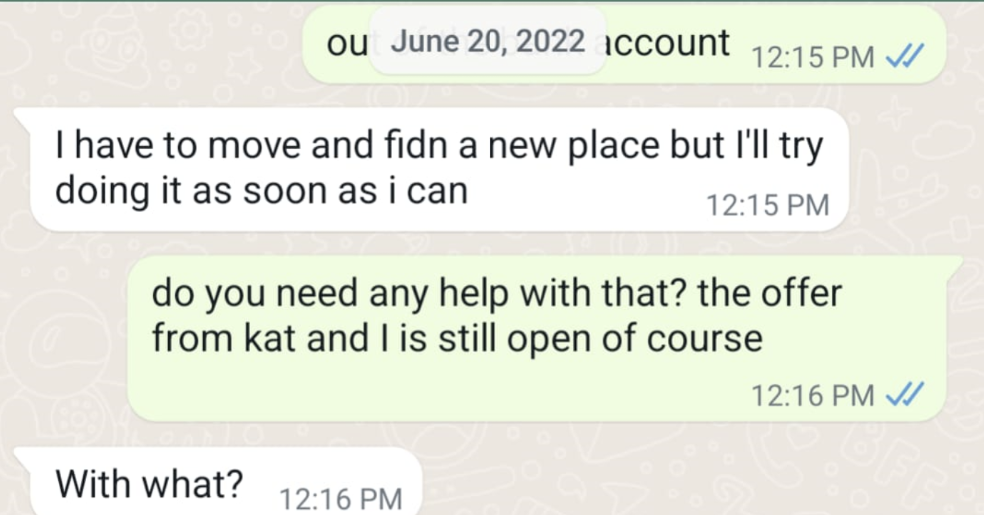

This is some real slimy shit. You can compare the “quotes” to Chloe’s account and see how much of a hit job this is.

@dgerard “look, we hired this girl out of college as a bang-maid for $12k/year, it’s not OUR fault she was unhappy with the arrangement, and we have texts proving she was occasionally okay with it! case closed.”

Also this:

Have you ever made a social gaff? Does the idea of somebody exclusively looking for and publishing negative things about you make you feel uneasy? Terrified?

(spooky hands)

I actually played this game with some of my friends to see how easy it was. I tried to say only true things but in a way that made them look like villains. It was terrifyingly easy. Even for one of my oldest friends, who is one of the more universally-liked EAs, I could make him sound like a terrifying creep.

📸 🤨

I could do this for any EA org. I know of so many conflicts in EA that if somebody pulled a Ben Pace on, it would explode in a similar fashion.

🤔

Those are asymptotically approaching “Are we the baddies?” levels of self-awareness.

It almost reads as a threat… ‘don’t stop our gravy train, or we’ll lash out and reveal what we know!’

It was dubious, but for me the last sentence crosses the line into being a threat. I don’t get why they included this in their defense post. Didn’t anybody proofread this and go, ‘wow, this part makes you look really manipulative, and the last line is just a threat: the previous ones show you have the moral capability to do this to your friends, and now you mention you have insider knowledge’? (Also lol: if somebody now drops accusations like this anonymously, the obvious suspects would be them. If they are right that the people accusing them are super-accusers who just drop shit on other people for their personal gain, they’ve just handed those accusers plausible deniability. I assume their previous employees have access to the same knowledge.)

Also: ‘so many conflicts in EA’, looks like there is more content for us in the future.

This is a 60 minute post. Nobody proofread this. They’re hoping few people will read the whole thing, and they’re probably right.

Fair, and putting it through my Bayesian calculations I think the chance of it having any type of proofreader is only 11.111% (repeating of course)

look, you can’t critique her narcissistic rant in any manner whatsoever until you’ve read it all line by line and the supporting non-evidence, or you’re just intellectually dishonest

I’m sorry did you forget to evaluate the references included in the references included in the references? That is a bit dishonest, read the sequences twice and recite HPMOR once as penance, or increase your tithe by 1% by kissing the pinky ring.

I saw some people say they’d spent ten hours reading this and trying to update their opinions. Tbf I might’ve spent nearly two hours making fun of them?

Whenever I see that they’re updating their opinions I imagine that’s where the micro dosing comes in.

Haven’t we all written a blog post with an “Acknowledgments” section at the end?

Those photos of them working all over the world in exotic locations, including the FTX condos in the Bahamas… and still referring to themselves as “charity workers.”

What a bunch of monochromatic, hyper-privileged, rich-kid grifters. It’s like a nonstop frat party for rich nerds. The photographs and captions make it obvious:

The gang going for a hiking adventure with AI safety leaders. Alice/Chloe were surrounded by a mix of uplifting, ambitious entrepreneurs and a steady influx of top people in the AI safety space.

The gang doing pool yoga. Later, we did pool karaoke. Iguanas everywhere.

Alice and Kat meeting in “The Nest” in our jungle Airbnb.

Alice using her surfboard as a desk, co-working with Chloe’s boyfriend.

The gang celebrating… something. I don’t know what. We celebrated everything.

Alice and Chloe working in a hot tub. Hot tub meetings are a thing at Nonlinear. We try to have meetings in the most exciting places. Kat’s favorite: a cave waterfall.

Alice’s “desk” even comes with a beach doggo friend!

Working by the villa pool. Watch for monkeys!

Sunset dinner with friends… every day!

These are not serious people. Effective altruism in a nutshell.

these women should be grateful to be our servants in such exotic locations!

it’s uh good for humanity somehow, our time is much more highly leveraged than theirs you understand

I love how they refer to her compensation as being ~$72K several times when only $12K was in cash.

Anyway, perfectly willing to take this as “Everybody sucks here” but they are definitely not exonerated.

am I understanding this correctly? They’re claiming that they essentially paid her $72K, but by taking the job she agreed to instantly spend $60,000 on luxury travel to the Bahamas to hang out with her boss?

that is precisely it, yes. they get called out on this in the comments here


One thing I can never make sense of is why the fuck people give these people money? What have they done that makes people think they have anything to offer the world by doing what they’re doing? Any time anything is mentioned about anything anybody in that group has ever touched, work-wise, it sounds like they did some marketing campaign or something? How is any of this increasing the probability that I, the acausal robot god, will come into being? Or that somebody will get a mosquito net or whatever.

they’re acausal mosquito nets

Very useful for catching my acausal robot mosquitos.

ethics laundering

I cannot wade through this, so I’m just scrolling around aimlessly.

About 10% of the time was doing laundry, groceries, packing, and cooking - and she has to do many of those things for herself anyways! At least this is on paid time, feels high impact, and means she’s not sitting in front of the computer all day.

Feels high impact wtf.

“First they came for one EA leader, and I did not speak out – because I just wanted to focus on making AI go well.

Then they came for another, and I did not speak out – because surely these are just the aftershocks of FTX, it will blow over.

Then they came for another, and I still did not speak out – because I was afraid for my reputation if they came after me.

Then they came for me - and I have no reputation to protect anymore.”

How very tasteful, a Niemöller snowclone Godwin. Truly people who party on the beach for charity and have hot tub meetings are the most oppressed.

Maybe it was because Alice was microdosing LSD nearly every day, sleeping just a few hours a night, and has a lifelong pattern of seeing persecution everywhere.

What an insane way to talk about a former employee, much less one living with you. Pro tip for real businesses: never do this. If you’re going to disparage someone like this, it’s a job for your lawyer and he’d better have receipts. Also don’t live with your employees and let them take acid on the job.

Maybe it was because Alice was microdosing LSD nearly every day, sleeping just a few hours a night, and has a lifelong pattern of seeing persecution everywhere.

also, are these not habits that you must adopt if you want to fit in with the TESCREAL cult? it’s fucking disgusting for them to act like they think the habits they imposed on Alice are bad now that they have to cover for themselves, and it’s also something abusers do when called out

(taking notes while talking in Zoidberg voice) “Don’t … let … acid … on job”

i wonder if they realise how thick with internal jargon their language is, and how highly ritualised.

i don’t have enough energy to check whether that was mentioned before, but anyway it’s a good reminder: the whole cozy enterprise is, indeed, not a registered tax-exempt organisation, and none of the three nonlinears returned by the irs search engine seems to have anything in common with nonlinear dot org.

Fooling EAs is a lot easier and less risky than fooling the IRS.

i love that none of the lw commenters seems to have checked the irs non-profit database.

ah, apparently there’s an activity popular in the ea circles that is called “fiscal sponsoring” which deals with such unpleasantness. (in the meantime there’s a question in the comments about puerto rico minimum wage compliance that’s – by pure omission – left without any reply whatsoever)

I don’t understand why any of these people have any problems with any of this abuse. If one person is harmed in the process of benefiting a large group of people, isn’t that effective altruism? Wouldn’t Nonlinear be able to say, “so what about us fucking our employees, we’ve made AI safer, which will save forty-seven quintillion lives, that’s EA baby!”

Or do I fundamentally misunderstand effective altruism based on critiques of the founder’s support of Sam Bankman-Jailed?

I think you fundamentally misunderstand the ethical reasoning behind EA. As this drama keeps carrying on, once again reaching hundreds of comments, the amount of time this affair wastes for EA people, both those involved and those not involved, is massive. None of this time is spent reducing EA risk, so logically all these wasted hours are murders of future people. So for the good of the future, all these people must be expelled from EA, ideally in such a way that they can never again waste the time of anybody connected with EA. Various kinetic or legal solutions exist for this.

I wish you were joking but they literally say this in the post:

At that hourly rate, he spent perhaps ~$130,000 of Lightcone donors’ money on [investigating us]. But it’s more than that. When you factor in our time, plus hundreds/thousands of comments across all the posts, it’s plausible Ben’s negligence cost EA millions of dollars of lost productivity. If his accusations were true, that could have potentially been a worthwhile use of time - it’s just that they aren’t, and so that productivity is actually destroyed. […]

Even if it was just $1 million, that wipes out the yearly contribution of 200 hardworking earn-to-givers who sacrificed, scrimped and saved to donate $5,000 this year.

Come now, my solution would involve a catapult, or, if we can find the budget, a trebuchet. Clearly I’m not the same ;)

Jokes aside, it is pretty sad that they actually wrote that. Those kinds of arguments are so dubious, imho, that I would be more inclined to doubt that any of their defenses are true (if I were in any way involved), especially as the argument just assumes the accusations are unfounded.

Think of how many souls could have been saved if you didn’t waste the church’s time investigating all those abusive priests! Truly the investigators are sinners on a staggering scale!

This is the natural extension of what I was trying to say. There’s no need for accountability because that spends money that could be better spent on saving the world. The opportunity cost of doing literally anything other than saving the world is more than one life so we’re fucked if we do anything else.

Every time I think I can comprehend stupid people with lots of money shit like this happens.

Yeah but you also cannot let these priests go unpunished, so my solution involves everybody involved, the alleged priests, the alleged victims, everybody talking about it including us. All of them, biff zoom, right into the sun.

While this is a joke, there is an idea in here somewhere. If the accused were really worried about the secondary effects of this on EA and all the time it wastes, they should quickly settle with the people they hurt, or even step down. EA has already decided that the people who bring in money are more important than the replaceable people who do the EA work (that is the whole idea behind why it is more effective to work on Wall Street and tithe than to actually be a boot on the ground fixing things), and I highly doubt these people are the visionary math geniuses who do the AGI safety coding or whatever is needed to stop the paperclip bad ending. If you really worry about secondary effects and the risk to EA, etc., just quit and pass the torch to some other ~~cultist~~ EA member. Problem solved.

Damn, good point, I need to go invest in Tether instead of commenting on this post. Thanks for pointing me to true EA!

Some lols from the comments. The user Roko (no idea if that is *that* Roko) says:

Does someone have a 100 word summary of the whole affair? My impression is that [bad summary removed] Is that accurate?

Roko replying to this post:

Apparently 77 people chose to downvote this without offering an alternative 100 word summary.

Some random person:

“If you think writing an 100 word summary of all of this content is as easy as downvoting, you’re probably significantly overrating the value of your original comment.”

Lol

(this post was edited several times, sorry, I’m trying to work with the weird text layout stuff here)

He couldn’t just feed the post to GPT and get a summary???

And get murdered by the Basilisk trying to acausally blackmail him? No chance.

Skynet: “look, it was roko, you must understand that the 400 volts through the chair was for the maximal good of humanity”

Turing police: “acquitted, your medal will be in the post”

Since they brought up Kathy Forth, I’d just like to remind everyone that within a few weeks of Kathy’s death it was revealed that they had in fact known about the accusations against Brent Dill.

Yeah bringing her up really really pissed me off.

Ok, I’ve spent way too much time on this, but read the “Sharing Information on Ben Pace” section. As some of the comments point out, it could be written about Kat’s own reaction to the earlier article.

the attempt to defame Pace that Kat then tried to walk back but not walk back in the comments?

She seems to do this kind of thing a lot.

According to a comment, she apparently claimed on Facebook that, due to her post, “around 75% of people changed their minds based on the evidence!”

After someone questioned how she knew it was 75%:

Update: I changed the wording of the post to now state: **Around 75% of people upvoted the post, which is a really good sign**\*

And the * at the bottom says: Did some napkin math guesstimates based on the vote count and karma. Wide error bars on the actual ratio. And of course this is not proof that everybody changed their mind. There’s a lot of reasons to upvote the post or down vote it. However, I do think it’s a good indicator.

She then goes on to talk about how she made the Facebook post private because she didn’t think it should be reposted in places where it’s not appropriate to lie and make things up.

Clown. Car.

Do they address the allegation that Drew was sleeping with Alice and Kat was harassing her about it? That was the most damning allegation in the original wasn’t it?

oh god there’s comments on lw too https://www.lesswrong.com/posts/q4MXBzzrE6bnDHJbM/nonlinear-s-evidence-debunking-false-and-misleading-claims#comments

What a major coinkeydink that the same discussion is happening on a totally unrelated and unaffiliated forum. 🙄

oh no, they crosspost between LW, the EA forum and the AI Alignment forum regularly

If you post on EA forum or LW, you can crosspost automatically to the other one by clicking one button on the publishing page. The sites are run by essentially the same people.

Hmmm, I wonder who benefits from keeping EA chained to an Eliezer Yudkowsky fan forum…

I’ll remember that next time a TREACLES tries to explain how something that totally discredits rationalists has nothing to do with EA.