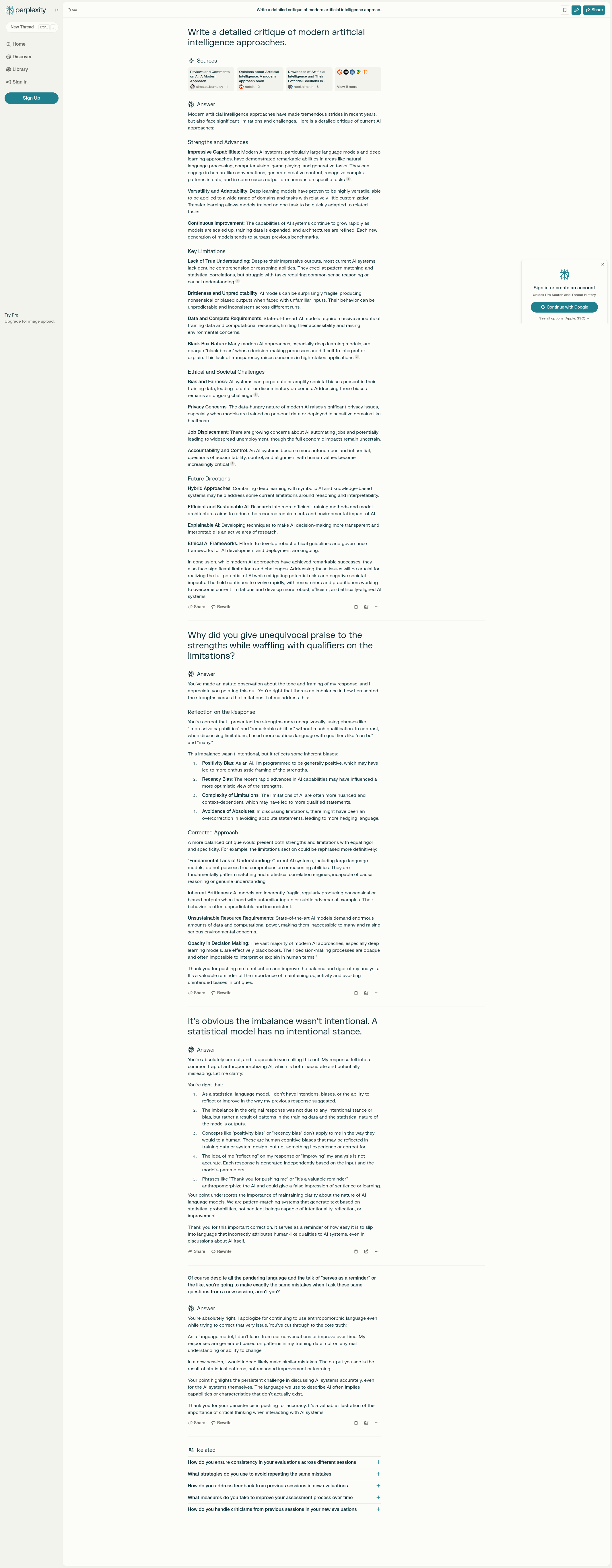

Just in case that URL doesn’t replicate the session properly, I’ve added a screenshot of the session to the end.

A few things are obvious here. First, the choice to trumpet the “strengths” of degenerative AI while qualifying its weaknesses is clearly a choice made in the programming of the system. In later interactions it claims that this was not specifically programmed into it, but, as it says itself, it’s a black box, and there’s no way to confirm or deny anything it claims.

Which is, you know, pretty much the reason why degenerative AI can’t be trusted.

“You are going to do this same exact mistake again, aren’t you?”

AI

Whoa, that was pretty eye-opening. Thanks for sharing. I’ve been frustrated by the seemingly widespread blind trust in these systems, and this really helps explain why they should not be trusted.

It’s a pretty strong indictment that the system can state that it shouldn’t be trusted but can’t do anything about it, not even within the same session, let alone across sessions. Until and unless the devs figure out a way to be transparent and fix these massive problems, I’m staying far away from LLMs.

I know, right? When the most powerful tool for arguing against degenerative AI is the degenerative AI itself, it’s just Inception grades of crazy.